Creating an Orientation Matrix or Local Coordinate System

Constructing a Local Coordinate System from a Normal Vector

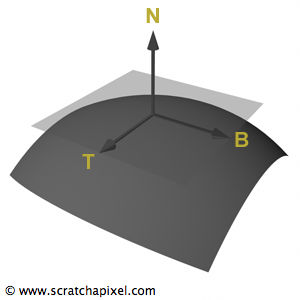

In this chapter, we apply our understanding of coordinate systems to build a local coordinate system (or frame) around a vector, often a surface normal. Renderers frequently use this technique to transform points and vectors from one coordinate system to another. The normal at a given point becomes one axis of the local frame, typically the up vector, while the tangent and bi-tangent at that point provide the two remaining orthogonal axes.

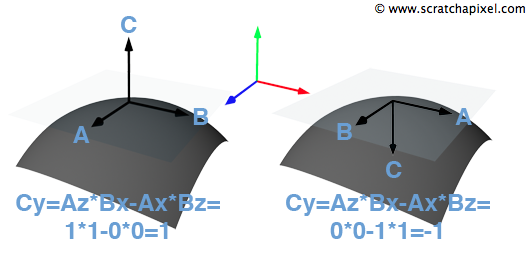

To build such a local frame, use the surface's normal, tangent, and bi-tangent at point P. The tangent and bi-tangent lie in the plane tangent to the surface at P, and the normal is perpendicular to that plane. All three vectors must be normalized and mutually orthogonal. The lessons on ray-geometry intersection will show how to compute the derivatives of the surface at the intersection point, called dPdu and dPdv. These derivatives give us a way to define the tangent and bi-tangent at P, although they are not always orthogonal to each other and may need to be orthogonalized and normalized first. The normal at P is typically computed as the cross product of dPdu and dPdv. Pay attention to the orientation of these vectors so that the cross product yields a normal pointing outward from the surface rather than inward: knowing their directions, you can apply the right-hand rule to determine the correct order of the operands (see chapter 3, Math Operations on Points and Vectors, for more).
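The sketch below shows one way to turn the two derivatives into an orthonormal frame. It assumes a minimal stand-in `Vec3` struct and hypothetical helpers `cross`, `dot`, `normalize`, `Frame`, and `frameFromDerivatives` (none of them come from the lesson's code). It also assumes that dPdu × dPdv points outward and that the derivatives are not parallel. Because dPdu and dPdv are not necessarily orthogonal, the bi-tangent is recomputed from N and T instead of being taken directly from dPdv.

```cpp
#include <cmath>

// Minimal stand-in for a 3D vector type (assumption: not the lesson's Vec3f).
struct Vec3 {
    float x, y, z;
};

Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a.y * b.z - a.z * b.y,
            a.z * b.x - a.x * b.z,
            a.x * b.y - a.y * b.x};
}

float dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 normalize(const Vec3& v) {
    float len = std::sqrt(dot(v, v));
    return {v.x / len, v.y / len, v.z / len};
}

// Hypothetical orthonormal frame at P: tangent, bi-tangent, normal.
struct Frame {
    Vec3 T, B, N;
};

// Builds a frame from the partial derivatives at P.
// Assumes dPdu x dPdv points away from the surface and the derivatives are not parallel.
Frame frameFromDerivatives(const Vec3& dPdu, const Vec3& dPdv) {
    Frame f;
    f.N = normalize(cross(dPdu, dPdv)); // operand order decides inward vs outward
    f.T = normalize(dPdu);              // tangent follows the u direction
    // N and T are unit length and orthogonal, so B is unit length and
    // orthogonal to both, even when dPdv was not orthogonal to dPdu.
    f.B = cross(f.N, f.T);
    return f;
}
```

For a plane spanned by dPdu = (2, 0, 0) and dPdv = (1, 3, 0), the derivatives are not orthogonal, yet the frame comes out as T = (1, 0, 0), B = (0, 1, 0), N = (0, 0, 1). With this construction T × B = N, so the three vectors can be placed in either of the matrix layouts discussed in this chapter.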

If we designate the normal N as the up vector, the tangent T as the right vector, and the bi-tangent B as the forward vector, we can arrange these vectors as the rows of a 4x4 matrix:

$$ \begin{bmatrix} T_x&T_y&T_z&0\\ N_x&N_y&N_z&0\\ B_x&B_y&B_z&0\\ 0&0&0&1 \end{bmatrix} $$
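Writing out the product of a direction, as a row vector (the convention Scratchapixel uses), with this matrix shows what each row contributes. Because the fourth component of a direction is 0, the last row plays no role:

$$ \begin{bmatrix} x & y & z & 0 \end{bmatrix} \begin{bmatrix} T_x&T_y&T_z&0\\ N_x&N_y&N_z&0\\ B_x&B_y&B_z&0\\ 0&0&0&1 \end{bmatrix} = \begin{bmatrix} xT_x + yN_x + zB_x & xT_y + yN_y + zB_y & xT_z + yN_z + zB_z & 0 \end{bmatrix} $$

In other words, the result is \( xT + yN + zB \): coordinates measured along \( T \), \( N \), and \( B \) are turned into the corresponding vector in the space in which those three axes are expressed.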

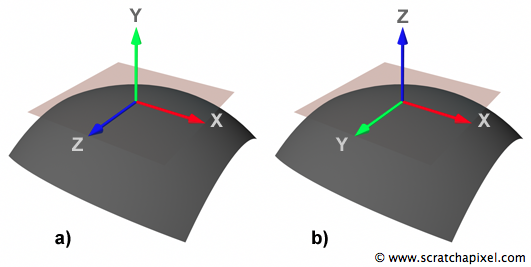

When using this matrix in your code, be mindful of the context in which it is used. Some parts of the code, particularly those related to shading, may follow a different convention in which the z-axis is the up vector. This convention, often presented in discussions of spherical coordinates, requires reordering the matrix rows as follows:

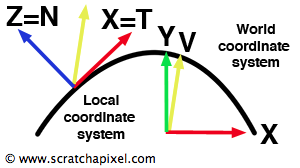

$$ \begin{bmatrix} T_x&T_y&T_z&0\\ B_x&B_y&B_z&0\\ N_x&N_y&N_z&0\\ 0&0&0&1 \end{bmatrix} $$

This places the normal's coordinates in the third row of the matrix, following the shading convention of aligning the surface normal with the z-axis. The convention may not feel intuitive at first, but it is widely used in shading, so we adopt it for consistency. As Figure 4 shows, the world coordinate system typically uses the y-axis as the up vector, whereas this local coordinate system uses the z-axis.
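One way to fill either layout in code is sketched below. It assumes a minimal `Vec3` struct and a `Matrix44` alias over `std::array` that stores rows (stand-ins, not the lesson's own classes); `rowsToMatrix`, `orientationYUp`, and `orientationZUp` are hypothetical names. The two conventions differ only in the order in which the vectors are written into the rows.

```cpp
#include <array>

// Minimal stand-ins (assumption: not the lesson's Vec3f / matrix classes).
struct Vec3 { float x, y, z; };
using Matrix44 = std::array<std::array<float, 4>, 4>; // m[row][column]

// Writes three axis vectors into the rows of a 4x4 matrix.
// The fourth row and column stay (0, 0, 0, 1): no translation.
Matrix44 rowsToMatrix(const Vec3& r0, const Vec3& r1, const Vec3& r2) {
    return {{{r0.x, r0.y, r0.z, 0},
             {r1.x, r1.y, r1.z, 0},
             {r2.x, r2.y, r2.z, 0},
             {0, 0, 0, 1}}};
}

// y-up layout: rows T, N, B.
Matrix44 orientationYUp(const Vec3& T, const Vec3& N, const Vec3& B) {
    return rowsToMatrix(T, N, B);
}

// z-up (shading) layout: rows T, B, N.
Matrix44 orientationZUp(const Vec3& T, const Vec3& B, const Vec3& N) {
    return rowsToMatrix(T, B, N);
}
```

For example, orientationZUp with T = (1, 0, 0), B = (0, 1, 0), and N = (0, 0, 1) returns the identity matrix, since the local frame then coincides with the world axes.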

Note that if you use the column-major convention (Scratchapixel uses row-major order), the vectors must be written as columns rather than rows. With the z-axis as the up vector, T's coordinates then fill the first column, B's the second, and N's the third.
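For reference, the z-up orientation matrix written in the column-major convention is simply the transpose of the row-major version:

$$ \begin{bmatrix} T_x&B_x&N_x&0\\ T_y&B_y&N_y&0\\ T_z&B_z&N_z&0\\ 0&0&0&1 \end{bmatrix} $$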

What purpose does this matrix serve? With Scratchapixel's row-vector convention, multiplying a vector whose coordinates are expressed in the local frame by this matrix gives that vector in world space. Because the matrix is orthonormal, its inverse is simply its transpose: multiplying a world-space vector \(v\) by the transpose yields \(v_M\), whose coordinates are defined relative to the local coordinate system built from \(N\), \(T\), and \(B\). The matrix has no translation component (its fourth row is \((0, 0, 0, 1)\)), which is why it is called an orientation matrix: it is meant for transforming vectors, not points. This is invaluable in shading, where the direction of vectors relative to the surface normal (aligned by convention with the y- or z-axis) largely determines the color computed at the ray intersection point, as illustrated in Figure 4. The forthcoming lesson on Shading covers this in detail.
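A minimal sketch of both directions follows, assuming a stand-in `Vec3` struct and a hypothetical `Frame` holding T, B, and N in the z-up shading convention. Because the frame is orthonormal, going from world to local takes only three dot products. For a unit vector, the local z coordinate is directly the cosine of its angle with the normal, which is one reason shading code likes to work in this frame. The names `toLocal`, `toWorld`, and `cosTheta` are illustrative, not from the lesson.

```cpp
// Minimal stand-in vector type (assumption: not the lesson's Vec3f).
struct Vec3 { float x, y, z; };

float dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Hypothetical orthonormal frame, z-up shading convention: T = x, B = y, N = z.
struct Frame { Vec3 T, B, N; };

// World -> local: project v onto each axis. This is equivalent to
// multiplying by the transpose (the inverse) of the orientation matrix.
Vec3 toLocal(const Frame& f, const Vec3& v) {
    return {dot(v, f.T), dot(v, f.B), dot(v, f.N)};
}

// Local -> world: weight each axis by the matching coordinate, which is
// what the row-vector product with the rows T, B, N computes.
Vec3 toWorld(const Frame& f, const Vec3& v) {
    return {v.x * f.T.x + v.y * f.B.x + v.z * f.N.x,
            v.x * f.T.y + v.y * f.B.y + v.z * f.N.y,
            v.x * f.T.z + v.y * f.B.z + v.z * f.N.z};
}

// For a unit vector expressed in the local frame, cos(angle with N) is its z.
float cosTheta(const Vec3& vLocal) { return vLocal.z; }
```

With T = (0, -1, 0), B = (1, 0, 0), and N = (0, 0, 1), the world vector (1, 2, 3) has local coordinates (-2, 1, 3), and toWorld maps them back to (1, 2, 3).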

Affine Space: Some ray tracing libraries, such as Intel's Embree, represent matrices and transformations as affine spaces. An affine space defines a Cartesian coordinate system by a location in space (the origin, denoted O) and three axes (Vx, Vy, Vz).
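What an affine space adds to an orientation matrix is the origin O. The sketch below uses a hypothetical `AffineFrame` struct; it is not Embree's API, whose types are not reproduced here. Directions are transformed with the three axes only, while points are also offset by O, which plays the role the translation row plays in a 4x4 matrix.

```cpp
// Minimal stand-in vector type with the two operations needed below.
struct Vec3 { float x, y, z; };

Vec3 operator+(const Vec3& a, const Vec3& b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(float s, const Vec3& v) { return {s * v.x, s * v.y, s * v.z}; }

// Hypothetical affine frame: three axes plus an origin (not Embree's type).
struct AffineFrame {
    Vec3 vx, vy, vz; // linear part: the orientation
    Vec3 O;          // location of the frame's origin
};

// Vectors (directions) only use the axes...
Vec3 transformVector(const AffineFrame& a, const Vec3& v) {
    return v.x * a.vx + v.y * a.vy + v.z * a.vz;
}

// ...while points are also offset by the origin.
Vec3 transformPoint(const AffineFrame& a, const Vec3& p) {
    return a.O + transformVector(a, p);
}
```

With axes equal to the world axes and O = (5, 0, 0), the point (1, 2, 3) maps to (6, 2, 3), while the vector (1, 2, 3) is unchanged.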

Code

Here is an implementation of these concepts. The function below takes a normalized vector v1, which plays the role of the z-axis of a right-handed local frame (typically the shading normal \( N \)), and computes two vectors v2 and v3 that complete the orthonormal basis:

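```cpp
#include <cmath>

// Builds an orthonormal basis (v2, v3, v1) around v1, the local z-axis.
// v1 must be normalized. Vec3f is the vector class from the earlier chapters.
void CoordinateSystem(const Vec3f& v1, Vec3f& v2, Vec3f& v3) {
    // Keep the larger of |x| and |y| so the length below stays well above
    // zero (at least sqrt(1/2) for a unit v1).
    if (std::fabs(v1.x) > std::fabs(v1.y)) {
        // Zero out y: (v1.z, 0, -v1.x) is perpendicular to v1.
        float inv_len = 1 / std::sqrt(v1.x * v1.x + v1.z * v1.z);
        v2 = Vec3f(v1.z * inv_len, 0, -v1.x * inv_len);
    }
    else {
        // Zero out x: (0, -v1.z, v1.y) is perpendicular to v1.
        float inv_len = 1 / std::sqrt(v1.y * v1.y + v1.z * v1.z);
        v2 = Vec3f(0, -v1.z * inv_len, v1.y * inv_len);
    }
    // The third axis is perpendicular to both v1 and v2.
    v3 = v1.Cross(v2);
}
```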
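The function relies on a Vec3f class with a Cross method, defined in the earlier chapters. To compile the snippet on its own, a minimal stand-in such as the following could be placed before it. This is an assumption: it contains only the members needed here, not Scratchapixel's full class.

```cpp
#include <cmath>

// Minimal stand-in for the lesson's Vec3f (assumption: reduced to what is needed here).
struct Vec3f {
    float x, y, z;
    Vec3f() : x(0), y(0), z(0) {}
    Vec3f(float x_, float y_, float z_) : x(x_), y(y_), z(z_) {}
    // Standard cross product: this x v.
    Vec3f Cross(const Vec3f& v) const {
        return Vec3f(y * v.z - z * v.y, z * v.x - x * v.z, x * v.y - y * v.x);
    }
    float Dot(const Vec3f& v) const { return x * v.x + y * v.y + z * v.z; }
    float Length() const { return std::sqrt(Dot(*this)); }
};
```

You can then call `Vec3f v2, v3; CoordinateSystem(Vec3f(0, 0, 1), v2, v3);` and compare v2 and v3 with the values worked out by hand for the z-axis.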

- For \( N = \text{z-axis} \), which is \( (0, 0, 1) \):
  - The condition \( \vert N.x \vert > \vert N.y \vert \) is false (since \( N.x = 0 \) and \( N.y = 0 \)), so the else branch runs.
  - Therefore, \( Nt = \text{Vec3f}(0, -N.z, N.y) / \sqrt{N.y^2 + N.z^2} \).
  - Substituting \( N = (0, 0, 1) \) gives \( Nt = \text{Vec3f}(0, -1, 0) \).
- Then, compute \( Nb = N \times Nt \):
  - \( Nb = (0, 0, 1) \times (0, -1, 0) = (1, 0, 0) \).

Therefore, if \( N \) represents the z-axis in a right-handed coordinate system, after executing CoordinateSystem(v1, v2, v3), you would expect:

- v2, that is \( Nt \) (the tangent, or x-axis of the new frame), to be aligned with the negative y-axis: \( (0, -1, 0) \).
- v3, that is \( Nb \) (the bitangent, or y-axis of the new frame), to be aligned with the x-axis: \( (1, 0, 0) \).

With this assignment, \( Nt \times Nb = (0, -1, 0) \times (1, 0, 0) = (0, 0, 1) = N \), so the new frame is right-handed.

Conceptually, the resulting frame is a rotation of the original coordinate system that maps its z-axis onto \( N \), with \( Nt \) and \( Nb \) completing an orthonormal basis in which \( N \) is the normal.
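The z-axis example exercises only the simplest case. As a further check, here is the same computation worked by hand for a normal that is not axis-aligned, \( N = (1/3, 2/3, 2/3) \), a unit vector chosen only for illustration. Since \( \vert N.x \vert < \vert N.y \vert \), the else branch runs:

$$
\begin{aligned}
\text{inv\_len} &= \frac{1}{\sqrt{N.y^2 + N.z^2}} = \frac{1}{\sqrt{8/9}} = \frac{3}{2\sqrt{2}},\\
Nt &= \left(0,\ -N.z \cdot \text{inv\_len},\ N.y \cdot \text{inv\_len}\right) = \left(0,\ -\tfrac{1}{\sqrt{2}},\ \tfrac{1}{\sqrt{2}}\right),\\
Nb &= N \times Nt = \left(\tfrac{4}{3\sqrt{2}},\ -\tfrac{1}{3\sqrt{2}},\ -\tfrac{1}{3\sqrt{2}}\right).
\end{aligned}
$$

\( Nb \) has unit length, since \( (16 + 1 + 1)/18 = 1 \), and all three pairwise dot products vanish, for instance \( N \cdot Nb = (4 - 2 - 2)/(9\sqrt{2}) = 0 \).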

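If you use a left-handed coordinate system, use the following code instead. It negates v2, and therefore v3 (since v3 = v1 × v2), which turns the frame by 180 degrees around v1; the basis remains orthonormal.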

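```cpp
#include <cmath>

// Left-handed variant: same construction as above with v2 (and hence v3) negated.
// v1 must be normalized. Vec3f is the vector class from the earlier chapters.
void CoordinateSystem(const Vec3f& v1, Vec3f& v2, Vec3f& v3) {
    if (std::fabs(v1.x) > std::fabs(v1.y)) {
        // (-v1.z, 0, v1.x) is perpendicular to v1.
        float inv_len = 1 / std::sqrt(v1.x * v1.x + v1.z * v1.z);
        v2 = Vec3f(-v1.z * inv_len, 0, v1.x * inv_len);
    }
    else {
        // (0, v1.z, -v1.y) is perpendicular to v1.
        float inv_len = 1 / std::sqrt(v1.y * v1.y + v1.z * v1.z);
        v2 = Vec3f(0, v1.z * inv_len, -v1.y * inv_len);
    }
    v3 = v1.Cross(v2);
}
```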
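Running this variant on the same input, \( N = (0, 0, 1) \), also takes the else branch:

$$ Nt = (0,\ N.z,\ -N.y) / \sqrt{N.y^2 + N.z^2} = (0, 1, 0), \qquad Nb = N \times Nt = (0, 0, 1) \times (0, 1, 0) = (-1, 0, 0). $$

Both results are the negatives of the right-handed version's \( (0, -1, 0) \) and \( (1, 0, 0) \).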